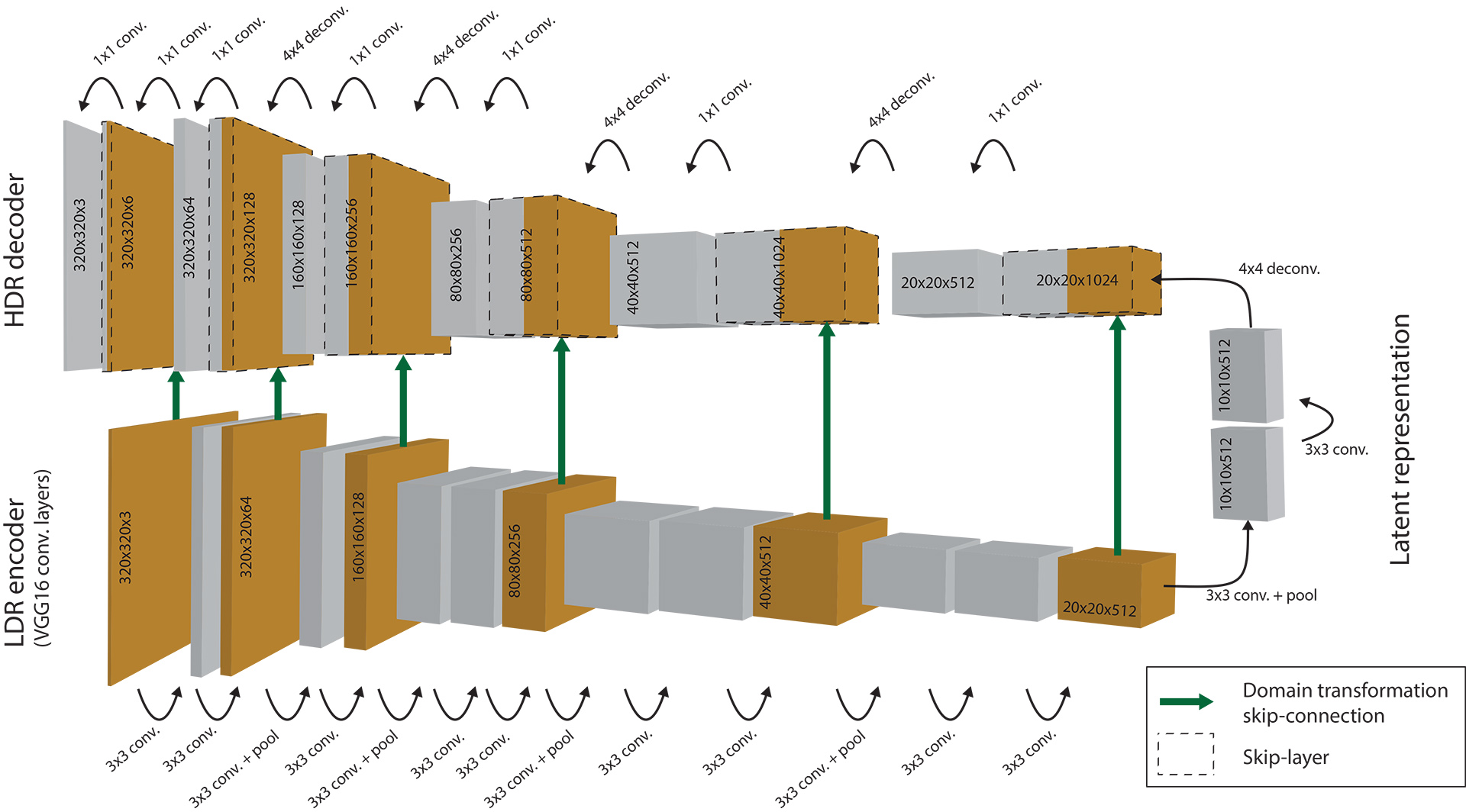

For training, we first gather data from a large set of existing HDR image sources to create a training dataset. For each HDR image, we then simulate a set of corresponding LDR exposures using a virtual camera model. The network weights are optimized over the dataset by minimizing a custom HDR loss function. As the amount of available HDR content is still limited, we use transfer learning: the weights are first pre-trained on a large set of simulated HDR images, created from a subset of the MIT Places database.
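
As a rough illustration of simulating LDR exposures from an HDR image, the sketch below applies a toy virtual camera to a list of linear pixel values. The function name `simulate_ldr`, the gamma curve, and the parameters `exposure`, `gamma` and `bits` are assumptions made for this example; the camera model actually used for training is specified in the paper and may differ.

```python
def simulate_ldr(hdr_pixels, exposure=1.0, gamma=2.2, bits=8):
    """Map linear HDR values to quantized LDR values in [0, 1].

    Toy camera (an assumption, not the model from the paper):
    scale by exposure, clip at sensor saturation, apply a gamma
    curve, then quantize to `bits` bits.
    """
    levels = (1 << bits) - 1
    ldr = []
    for value in hdr_pixels:
        # Exposure scaling followed by clipping: bright values saturate at 1.0.
        exposed = min(max(value * exposure, 0.0), 1.0)
        # Nonlinear camera curve, here a plain gamma encoding.
        encoded = exposed ** (1.0 / gamma)
        # Quantization to the LDR bit depth.
        ldr.append(round(encoded * levels) / levels)
    return ldr


if __name__ == "__main__":
    hdr = [0.01, 0.25, 1.0, 4.0, 50.0]  # linear scene values, arbitrary units
    for exposure in (0.05, 0.25, 1.0):
        print(exposure, simulate_ldr(hdr, exposure))
```

Varying `exposure` for the same HDR input yields several LDR versions with different amounts of clipped highlights, which is the kind of input/target pairing the training procedure relies on.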

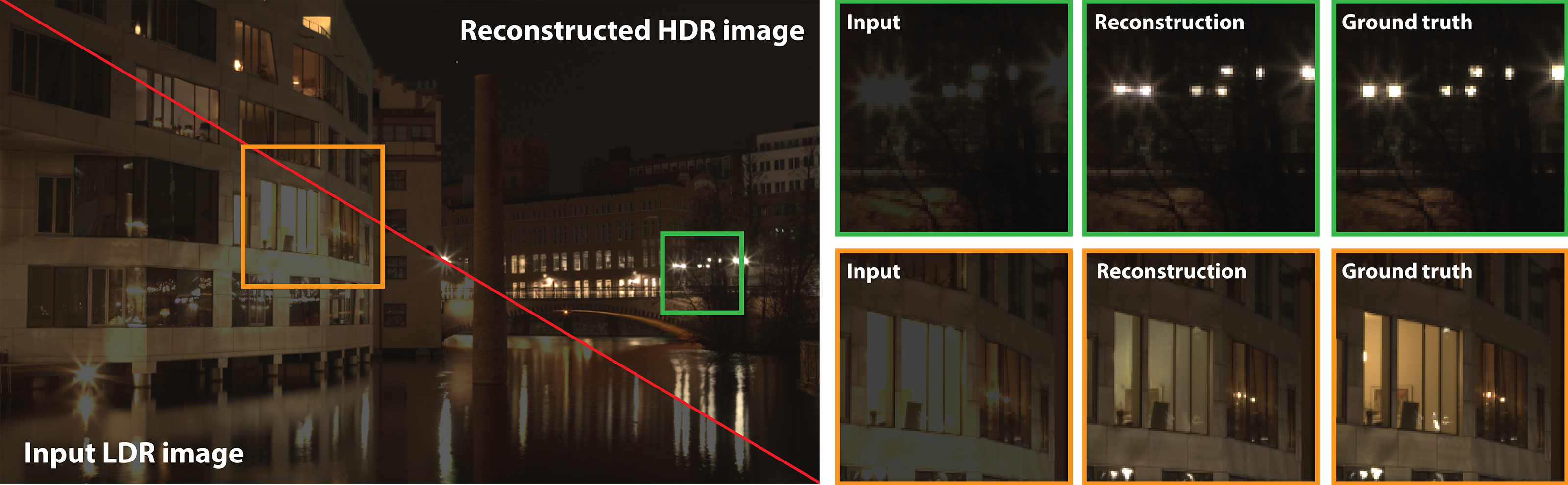

Expansion of LDR images for HDR applications is commonly referred to as inverse tone-mapping (iTM). Most existing inverse tone-mapping operators (iTMOs) do not reconstruct saturated pixels well. Instead, they focus on boosting the dynamic range so that it looks plausible on an HDR display, or on producing the rough estimates needed for image-based lighting (IBL). The proposed method demonstrates a step improvement in reconstruction quality: the structures and shapes in the saturated regions are recovered. This enables a range of new applications, such as exposure correction, tone-mapping, and glare simulation.
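
To make "saturated pixels" concrete, the following sketch marks the pixels of an LDR image that are close to the maximum value, since those are the ones whose original intensity was lost to clipping. The function names and the `threshold` value of 0.95 are hypothetical choices for illustration and are not taken from this page.

```python
def saturation_mask(ldr_pixels, threshold=0.95):
    """Soft mask in [0, 1]: 0 below `threshold`, rising linearly to 1 at full saturation.

    `threshold` is an illustrative assumption, not a value from the source.
    """
    span = 1.0 - threshold
    return [min(1.0, max(0.0, (value - threshold) / span)) for value in ldr_pixels]


def saturated_fraction(ldr_pixels, threshold=0.95):
    """Fraction of pixels with any saturation according to the mask above."""
    mask = saturation_mask(ldr_pixels, threshold)
    return sum(1 for m in mask if m > 0.0) / len(mask)


if __name__ == "__main__":
    ldr = [0.2, 0.5, 1.0, 1.0]  # normalized LDR pixel values
    print(saturation_mask(ldr))
    print(saturated_fraction(ldr))
```

A mask of this kind indicates where a reconstruction method has to invent content rather than merely linearize the existing pixel values.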

For details on the method, we refer to the paper. Additional results are available in the supplementary document, and the complete test set with reconstructions is also provided. To reconstruct arbitrary LDR images, source code is available on GitHub. All downloads are listed below.

| Download | Size | Description |
|---|---|---|
| Paper, small | 12.6 MB | Paper with JPEG-compressed figures. |
| Paper, large | 53.4 MB | Paper with no image compression applied. |
| Supplementary document | 11.5 MB | Supporting document with additional figures complementing those in the paper, as well as a specification of the HDR image sources used for training. |
| Presentation | 95.7 MB | Presentation from SIGGRAPH Asia 2017, Bangkok. |
| Video | 107 MB | The video overview presented above. |
| Testset reconstructions | 581 MB | A zipped archive of the 96 images in the test set, together with the corresponding HDR reconstructions in OpenEXR format. There are also JPGs with example exposures for easy comparison. These examples can be viewed with the accompanying HTML gallery. The gallery is also directly accessible here. |
| Source code | - | GitHub project with Python scripts for inference and training, implementing the autoencoder CNN using TensorFlow. The code is accompanied by trained parameters. |

@article{EKDMU17,
  author    = "Eilertsen, Gabriel and
               Kronander, Joel and
               Denes, Gyorgy and
               Mantiuk, Rafa{\l} and
               Unger, Jonas",
  title     = "HDR image reconstruction from a single exposure using deep CNNs",
  journal   = "ACM Transactions on Graphics (TOG)",
  number    = "6",
  volume    = "36",
  articleno = "178",
  year      = "2017"
}