
LiU HDRv Repository - IBL

Image based lighting

Image based lighting (IBL) is one of the key applications of HDR imaging (see What is IBL? below). Here, we show how IBL can be extended through the use of HDRv sequences. The benefit of using HDRv sequences instead of single HDR images (as in ordinary IBL) is that HDRv makes it possible to capture time-varying lighting conditions or spatial variations in the scene illumination. It is also possible to use computer vision algorithms to model and reconstruct the captured scene with detailed information describing both the direct light sources and the background illumination in full HDR.

The video displays example renderings from two scenes captured in Norrköping, Sweden. The HDRv setup used (see below) enables the lighting from the real world scene to be captured at the position of the virtual helicopter in each frame.

A drawback of the method used in the video above is that the illumination used in each frame is captured only at a single point in space (at the position of the mirror sphere). This means that the technique does not capture how the incident lighting varies from position to position over the helicopter body. In many cases it is therefore necessary to model or reconstruct the 3D scene and extract the direct light sources and the geometry that casts shadows. The image below displays an example scene that has been captured, reconstructed, and populated with virtual furniture (click images for high resolution versions).

Our IBL pipeline for capturing and modeling the lighting, including complex spatial variations in the scene illumination, is described in the (open access) Computers & Graphics publication Spatially varying image based lighting using HDR-video, in the EUSIPCO 2013 paper Temporally and Spatially Varying Image Based Lighting using HDR-Video, and in this thesis.

The image above displays a comparison between traditional IBL using a single light probe (left) and IBL using a lighting environment with 3D geometry that was built from a large number of HDR light probe images captured in an HDRv sequence (right). It is evident that the traditional IBL method cannot capture the spatial variations in the scene illumination. This is visible in the shadow cast by the window and in the reflection of the light sources in the photograph on the wall behind the sofa. The scene was modeled by Sören Larsson, RayLab.

Scene and illumination capture for IBL

The IBL scene capture is based on a 4 Mpixel global shutter HDRv camera with a dynamic range of more than 10,000,000:1 at 30 fps. The image displays the HDRv camera in a real-time light probe (RTLP) setup. The camera captures the environment through its reflection in a mirror sphere. This yields a close to 360 degree panorama at each capture position.
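As an illustration of why a mirror sphere yields a near-complete panorama, the following minimal sketch maps each pixel of a mirror sphere image to the world direction it reflects. It is not the processing used in the RTLP system. It assumes an idealized orthographic camera looking along -z and a perfect sphere that exactly fills a square image; the function name `mirror_sphere_directions` is hypothetical.

```python
import numpy as np

def mirror_sphere_directions(size):
    """Return (size, size, 3) reflected directions and a (size, size) validity mask.

    Assumes an orthographic camera looking along -z at a perfect mirror sphere
    that exactly fills the square image (both are simplifying assumptions).
    """
    # Pixel centers mapped to [-1, 1], with +x to the right and +y up.
    coords = (np.arange(size) + 0.5) / size * 2.0 - 1.0
    x, y = np.meshgrid(coords, -coords)
    r2 = x * x + y * y
    inside = r2 <= 1.0  # pixels that actually hit the sphere

    # Unit sphere normal at each pixel; +z points toward the camera.
    nz = np.sqrt(np.clip(1.0 - r2, 0.0, None))
    normal = np.stack([x, y, nz], axis=-1)

    view = np.array([0.0, 0.0, -1.0])  # direction in which camera rays travel
    # Mirror reflection: r = v - 2 (v . n) n
    v_dot_n = normal @ view
    reflected = view - 2.0 * v_dot_n[..., None] * normal
    reflected[~inside] = 0.0  # no direction outside the sphere silhouette
    return reflected, inside

directions, mask = mirror_sphere_directions(101)
```

Under these assumptions the center of the sphere reflects the direction back toward the camera, while pixels near the rim reflect directions behind the sphere. The direction straight behind the sphere is only reached at the grazing rim, which is consistent with the panorama being close to, but not exactly, 360 degrees.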

A scene is usually captured using a combination of panoramic light probe sequences and sequences with a smaller field of view to maximize the resolution at regions of special interest in the scene. The panoramic sequences ensure full angular coverage at each position and guarantee that the information required for IBL is captured. The system yields high resolution HDR environment maps that can be directly used for IBL rendering or scene reconstruction.

The image below displays an older RTLP system developed in collaboration with the camera manufacturer SICK-IVP. The first version, implemented as early as 2003, was capable of capturing images with a dynamic range of 10,000,000:1 and a resolution of 960 x 512 pixels at 25 frames per second. The 2004 Eurographics short paper A Real Time Light Probe (6.3 MB .pdf) gives an in-depth overview of the implementation details of this imaging system.
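To put the quoted contrast ratio of 10,000,000:1 in more familiar units, the short sketch below converts it to photographic stops and to decibels. The helper names are hypothetical, and the decibel figure assumes the 20 log10 convention commonly used for image sensors.

```python
import math

def dynamic_range_in_stops(contrast_ratio):
    """Number of photographic stops (doublings) spanned by a contrast ratio."""
    return math.log2(contrast_ratio)

def dynamic_range_in_decibels(contrast_ratio):
    """Dynamic range in dB, assuming the 20*log10 convention."""
    return 20.0 * math.log10(contrast_ratio)

stops = dynamic_range_in_stops(10_000_000)
decibels = dynamic_range_in_decibels(10_000_000)
print(f"{stops:.1f} stops, {decibels:.0f} dB")  # prints "23.3 stops, 140 dB"
```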

This imaging system has been used in a number of projects directed towards capturing, processing and rendering with spatially varying lighting conditions. We call this extension of IBL Incident Light Fields (ILF). It was first presented in the EGSR 2003 paper Capture, processing and rendering with incident light fields (3.1 MB .pdf), and later extended in the EGSR 2008 paper Free form incident light fields (3.1 MB .pdf) to include unrestricted capture, more efficient storage and rendering, as well as extraction and editing of the light sources in the scene.

The video displays an HDRv sequence of a mirror sphere (left) capturing a panoramic image of the environment. Each HDR video frame accurately captures the lighting in the scene and is used as the source of illumination in the corresponding rendering to the right.

The image below displays one of the first renderings using captured and reconstructed real world lighting exhibiting strong spatial variations. The lighting was captured using around 600 HDR light probe images distributed along a tracked 1D path in space. The tracking information makes it possible to geometrically relate the light probes and reconstruct the sharp shadows created by a blocker in front of the projector light source.

What is IBL?

Image based lighting (IBL) (Wikipedia) is a technique for capturing real world lighting conditions and using this information to create photo-realistic renderings of virtual objects such that they appear to be placed in the real scene. The real world lighting is usually captured as a single 360 degree panoramic HDR image. Since the HDR image covers the full dynamic range in the scene, the captured information can be used as a measurement of the lighting conditions. Since the image is captured as a panorama, it describes the lighting incident from any direction onto the capture position.

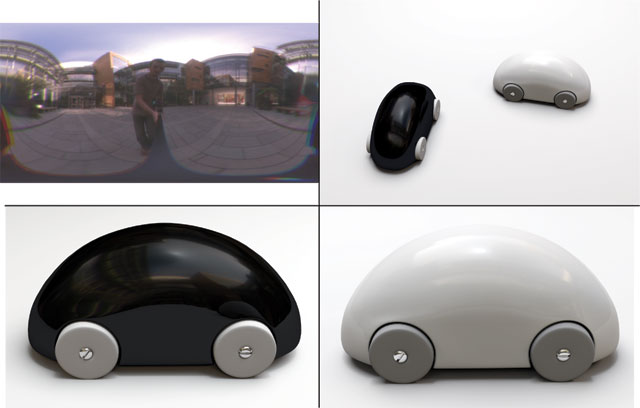

The image displays an IBL example with the panoramic HDR image in the top left and three renderings where the captured lighting information is used to illuminate the virtual toy cars. The panoramic HDR image is displayed as a so-called latitude-longitude panorama, which is a convenient format for viewing omni-directional images.
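To make the use of a latitude-longitude panorama as a lighting measurement concrete, here is a minimal sketch that computes the diffuse irradiance arriving at a surface with a given normal. It is an illustration under stated assumptions, not the renderer used for the images on this page: the surface is treated as Lambertian, occlusion is ignored, and the axis convention (+y up, row 0 at the zenith) is an assumption, since different tools lay out panoramas differently. The function names are hypothetical.

```python
import numpy as np

def latlong_directions(height, width):
    """Unit directions and per-pixel solid angles for a latitude-longitude map.

    Convention assumed here: +y is up, row 0 is the zenith, and column 0
    starts at azimuth -pi. Other tools may use other conventions.
    """
    theta = (np.arange(height) + 0.5) / height * np.pi            # polar angle from +y
    phi = (np.arange(width) + 0.5) / width * 2.0 * np.pi - np.pi  # azimuth
    phi, theta = np.meshgrid(phi, theta)
    dirs = np.stack([np.sin(theta) * np.sin(phi),
                     np.cos(theta),
                     -np.sin(theta) * np.cos(phi)], axis=-1)
    # Rows near the poles cover less solid angle, hence the sin(theta) weight.
    solid_angle = (np.pi / height) * (2.0 * np.pi / width) * np.sin(theta)
    return dirs, solid_angle

def diffuse_irradiance(env, normal):
    """Irradiance at a surface with the given normal, lit by an HDR map of shape (H, W, 3)."""
    dirs, solid_angle = latlong_directions(env.shape[0], env.shape[1])
    n = np.array(normal, dtype=float)  # copy so the caller's array is untouched
    n /= np.linalg.norm(n)
    # Only light from the hemisphere above the surface contributes.
    cosine = np.clip(dirs @ n, 0.0, None)
    weights = cosine * solid_angle
    return (env * weights[..., None]).sum(axis=(0, 1))

# Sanity check: a uniform white environment should give an irradiance close to pi.
uniform_env = np.ones((64, 128, 3))
irradiance_up = diffuse_irradiance(uniform_env, [0.0, 1.0, 0.0])
```

The solid-angle weight matters because the latitude-longitude format stretches the regions near the poles: without it, the pixels at the top and bottom of the panorama would contribute far too much light.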

The equipment and methods described above extend this technique to include temporal or spatial variations in the captured lighting environment.